What is a Container?

Hello! Today, we are talking about two things:

- the latest update of Docusaurus, which now lets me offer this blog in 2 languages!

- containers and their usefulness in software development.

Docusaurus

What is it?

Docusaurus is an open-source, optimized website generator that packages several useful features for online content creators:

- documentation and blog posts in Markdown;

- internationalization;

- versioning;

- well-tailored content search (thanks to the Algolia integration).

Why Docusaurus?

It's a fair question. Why choose Docusaurus when there are plenty of other site builders such as Wix, Shopify, or Weebly, or more developer-oriented tools such as Next.js, Hugo, Gatsby, and so on?

Well, I discovered it by pure chance while looking for a documentation generator for .NET (by the way, I found one: Docfx). However, I chose Docusaurus for my personal projects for 3 reasons: it is...

- open-source (it means I can contribute, consult its source code, etc.),

- developed in React (I love this library),

- easy to use (in around 3 minutes, you can have a functional and customized website).

This website is generated with Docusaurus

If you haven't noticed yet, this blog is generated with Docusaurus v2. You can even find the source code here on GitHub. If you spot a typo, please open an issue!

Okay, let's move on to the next and main topic: our introduction to containers.

Containers

What are we talking about?

One of the many problems in software development is that we are tightly coupled to the machine on which we write code. Whether it is for development or for deploying a web service for clients, there is always a machine somewhere hosting the app. And the issue is that systems and configurations differ from one machine to the next!

A container is a standard unit of software that packages code and its dependencies so that an application runs quickly and reliably from one isolated environment to another. It is as if you let the client use your own computer.

What are the differences with a Virtual Machine?

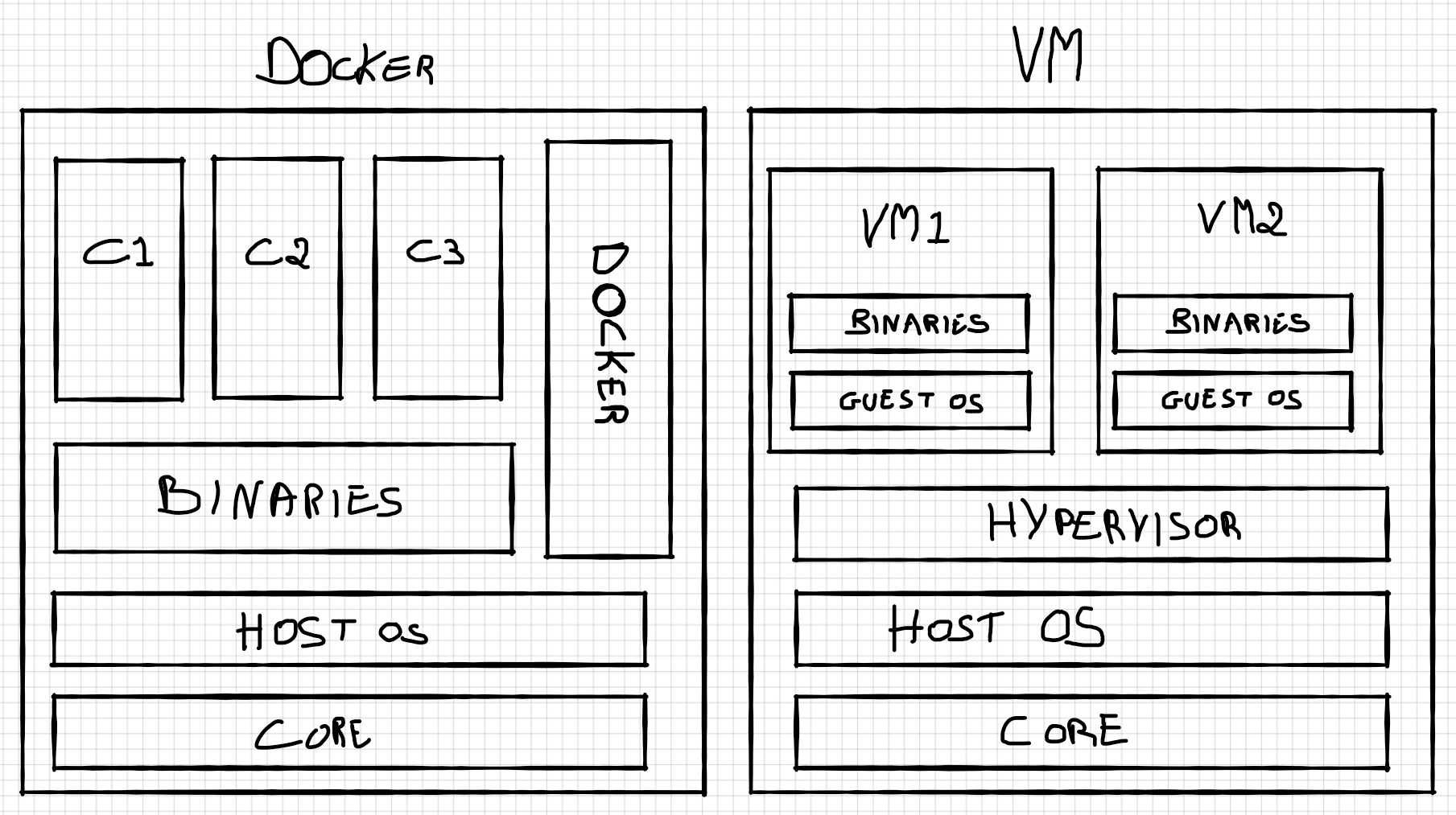

It is true that containers are often compared to virtual machines (VMs) in that they have the same goal: running an app in an isolated environment. The big difference between the two is that a container makes use of the host machine's operating system (OS), whereas a VM loads its own OS.

As a result, a machine with Docker installed offers the following advantages:

- light weight and speed,

- portability and standardization, and thus cross-platform support (to some extent).

This is easy to understand. Let's take a look at the drawing above:

It is a diagram comparing a server with Docker installed to a server that hosts VMs. Thanks to my excellent drawing skills, we can quickly understand why Docker is lightweight and fast: Docker relies on the host machine's installed OS and shares its kernel rather than loading a new OS. This means that the machine only has to load the containers themselves, which package code, environment variables, etc. (in other words, a few light things).

On the other hand, a system that uses VMs has a hypervisor that handles everything. This hypervisor does rely on the host machine's installed OS. However, each VM ships with its own OS and binaries.

In conclusion, a machine with a single OS can easily run 500 containers, but it's not the same story with 500 VMs... That said, efficiently managing many containers at the same time is a challenge of its own.

Yet Docker's strength can also be a weakness: because a container shares the host's kernel, a container that depends on Windows cannot run natively on a Linux machine (and vice versa).

Another important concern when using Docker is, of course, security! Containers may be isolated... but since they all share the same host, if even one of them is compromised, the whole machine (and thus every other container) may be at risk.

How does it work?

I'm gonna lay the foundations so that we speak the same language. By the way, I am referring to Docker specifically. It is made up of 3 components:

- the software, called the Docker daemon, is a process that manages Docker containers and related objects (e.g. Docker Engine, ...)

- the objects, themselves separated into 3 categories, are the entities used to build an application with Docker:

  - a container is a standardized environment that runs an application.

  - an image is a read-only template used to build a container. In other words, a container is a runnable instance of an image.

  - a service orchestrates several containers across multiple daemons, which work together as a swarm (a set of Docker daemons communicating through the Docker API, e.g. Docker Swarm; Kubernetes plays a comparable orchestration role).

- the registries are repositories that store Docker images (e.g. Docker Hub, Google Cloud Platform, ...)
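To make this vocabulary more concrete, here is a minimal Python sketch of how images, containers, and registries relate to each other. It is only a toy model: the names `Image`, `Container`, `Registry`, and `create_container`, as well as the image name `my-blog`, are hypothetical and are not part of Docker's actual API.

```python
from dataclasses import dataclass, field
from itertools import count


@dataclass(frozen=True)
class Image:
    """Read-only template (frozen, so it cannot be modified once built)."""
    name: str
    tag: str = "latest"


@dataclass
class Container:
    """Runnable instance of an image."""
    container_id: int
    image: Image


@dataclass
class Registry:
    """Toy repository that stores images under a 'name:tag' reference."""
    images: dict = field(default_factory=dict)

    def push(self, image: Image) -> None:
        self.images[f"{image.name}:{image.tag}"] = image

    def pull(self, reference: str) -> Image:
        return self.images[reference]


_next_id = count(1)


def create_container(image: Image) -> Container:
    """Instantiate a new container; the image itself is left untouched."""
    return Container(container_id=next(_next_id), image=image)


registry = Registry()
registry.push(Image("my-blog", "1.0"))  # hypothetical image name

image = registry.pull("my-blog:1.0")
first = create_container(image)
second = create_container(image)

print(first.image == second.image)                # same template...
print(first.container_id != second.container_id)  # ...but distinct instances
```

The point of the sketch is the one-to-many relationship: one image pulled from a registry can back as many containers as you like, and none of them modifies the image.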

When creating a container, you should follow a few principles:

- a container only runs one process;

- a container is immutable (meaning that, given the same environment variables, the container will always run the same process);

- a container is disposable (meaning that we can remove it, replace it, ... as we like).
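The "immutable" and "disposable" principles can be illustrated with a small Python simulation. This is not real Docker: `ContainerSpec` and `start` are hypothetical names, and the image name and variables are made up for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ContainerSpec:
    """Everything that defines a container: an image plus environment variables."""
    image: str
    env: tuple  # (name, value) pairs; a tuple keeps the spec frozen and hashable


def start(spec: ContainerSpec) -> str:
    """Describe the single process a container would run.

    The result depends only on the spec, which is the idea behind immutability.
    """
    settings = " ".join(f"{name}={value}" for name, value in sorted(spec.env))
    return f"{spec.image} [{settings}]"


spec = ContainerSpec(image="my-api:1.0", env=(("PORT", "8080"), ("MODE", "prod")))

first_run = start(spec)
# Disposable: forget the first container and create a fresh one from the same spec.
second_run = start(spec)

print(first_run == second_run)  # same image + same variables, same process
```

Because nothing lives inside the container that is not described by its spec, throwing it away and recreating it costs nothing.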

These 3 principles are just the most important ones in my humble opinion, but you can find the complete methodology on "The Twelve-Factor App" website.

Once you have an image locally (whether you pulled it from a registry or built it yourself), you can create a container. I could show you myself, but rather than reinvent the wheel, I'll point you to a short video that explains it very well!
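For those who prefer text to video, here is a hedged Python sketch of the two steps: building an image, then creating a container from it with the Docker CLI. `docker build -t` and `docker run --rm -e` are standard Docker CLI options, but the image name `my-app`, the `PORT` variable, and the helper function names are assumptions made for this example. The commands are only assembled and printed, so the sketch runs even on a machine without Docker.

```python
import shlex


def build_command(tag: str, context: str = ".") -> list:
    """Command that builds an image from the Dockerfile found in `context`."""
    return ["docker", "build", "-t", tag, context]


def run_command(image: str, env: dict) -> list:
    """Command that creates and starts a disposable container from `image`."""
    command = ["docker", "run", "--rm"]  # --rm removes the container when it exits
    for name, value in env.items():
        command += ["-e", f"{name}={value}"]  # -e sets an environment variable
    command.append(image)
    return command


# Hypothetical image name and variable, for illustration only.
print(shlex.join(build_command("my-app:1.0")))
print(shlex.join(run_command("my-app:1.0", {"PORT": "8080"})))
```

To actually execute one of these commands on a machine with Docker installed, you could pass the list to `subprocess.run(command, check=True)`.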

Why would you use Docker or any of its competitors?

Containerization brings significant benefits. Here's a non-exhaustive list:

- faster and easier onboarding of new team members;

- certainty that you and your colleagues work in the same environment (including the same versions of tools);

- enforcement of the "Do Not Reinvent The Wheel" principle, since open-source images are readily available;

- faster creation and teardown compared to VMs;

- environment consistency and isolation;

- broad compatibility (the development machine's OS does not matter, so everyone can work on their favorite workstation).

Containerizing applications is more and more common. One reason for this trend is the growth of the DevOps movement.

Conclusion

If you constantly run into environment configuration problems, if you always have to wait at least 1 week to deploy a test in a client environment, if it is always complicated to onboard new people on a project... then you might consider using containers in your daily work. That said, we have only scratched the surface of what Docker offers. I highly recommend that you keep reading up on the topic.

And if you're looking for a way to generate online documentation or you wish to start writing a blog, check out Docusaurus! 😁